Teaching LLMs tool use

🎁 In OpenFunctions-v2, we natively train the model to support parallel functions (generating multiple function calls at once) and multiple functions (selecting one or more functions from those provided). Java, REST, and Python APIs are also supported for the first time, with extended data types.

- Read More: Blog

- How well do other function-calling models perform: Berkeley Function Calling Leaderboard

- Play with the model online: Gorilla OpenFunctions-v2 Web Demo

- Check out the project: GitHub Code

- Model (6.91B) on HuggingFace 🤗: gorilla-llm/gorilla-openfunctions-v2
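To make "multiple" versus "parallel" concrete, here is a minimal client-side sketch. It assumes, hypothetically, that the model's reply has already been turned into a JSON list of `{"name", "arguments"}` objects; the actual output format of gorilla-openfunctions-v2 is documented in the GitHub repository and may differ. The tools `get_weather` and `get_time` are illustrative placeholders, not part of OpenFunctions.

```python
import json
from typing import Any, Callable

# Hypothetical tools offered to the model (placeholders for illustration only).
def get_weather(city: str, unit: str = "celsius") -> str:
    return f"weather({city}, {unit})"

def get_time(city: str) -> str:
    return f"time({city})"

TOOLS: dict[str, Callable[..., Any]] = {"get_weather": get_weather, "get_time": get_time}

def dispatch(model_output: str) -> list[Any]:
    """Run every call in an assumed JSON list of {"name": ..., "arguments": {...}} objects."""
    results = []
    for call in json.loads(model_output):
        fn = TOOLS.get(call["name"])
        if fn is None:
            # Fail loudly if the model picked a function it was never given.
            raise KeyError(f"unknown function: {call['name']}")
        results.append(fn(**call["arguments"]))
    return results

# "Multiple functions": the model selects one of several offered tools.
multiple = json.dumps([{"name": "get_time", "arguments": {"city": "Berkeley"}}])

# "Parallel functions": the model emits several calls in a single response.
parallel = json.dumps([
    {"name": "get_weather", "arguments": {"city": "Berkeley"}},
    {"name": "get_weather", "arguments": {"city": "Tokyo", "unit": "fahrenheit"}},
])

print(dispatch(multiple))  # ['time(Berkeley)']
print(dispatch(parallel))  # ['weather(Berkeley, celsius)', 'weather(Tokyo, fahrenheit)']
```

Raising on an unknown name, rather than silently skipping it, keeps a hallucinated function from passing unnoticed.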

Benchmarking LLMs on function calling capabilities

🏆 Berkeley Function-Calling Leaderboard (BFCL) 📊 aims to provide a thorough study of the function-calling capability of different LLMs. It consists of 2k 📝 question-function-answer pairs spanning multiple languages (🐍 Python, ☕ Java, 🟨 JavaScript, 🌐 REST API), diverse application domains, and complex use cases (multiple and parallel function calls). We also investigate function relevance detection 🕵️‍♂️ to determine how the model reacts when the provided functions are not suitable for answering the user's question.

- Read More: Blog

- Live Leaderboard: Website

- BFCL Evaluation Dataset: HuggingFace Dataset 🤗

- Gradio Demo: HuggingFace Space 🤗

- Reproducibility: GitHub Code
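The two kinds of checks described above can be sketched in a few lines of Python: matching a generated call against a set of accepted answers, and, for relevance detection, passing only when the model declines to call anything. This is a simplified illustration that assumes Python-style call strings with keyword arguments; the real BFCL checker in the GitHub repository is more involved, and `ast_match` and `irrelevance_ok` are hypothetical names.

```python
import ast
from typing import Any, Optional

def parse_call(text: str) -> Optional[tuple[str, dict[str, Any]]]:
    """Parse one call like "f(a=1, b='x')"; return None if the text is not such a call."""
    try:
        node = ast.parse(text.strip(), mode="eval").body
    except SyntaxError:
        return None  # natural-language replies usually end up here
    if not isinstance(node, ast.Call) or not isinstance(node.func, ast.Name):
        return None
    if node.args:
        return None  # this sketch only accepts keyword arguments
    kwargs: dict[str, Any] = {}
    for kw in node.keywords:
        if kw.arg is None:
            return None  # reject **kwargs unpacking
        try:
            kwargs[kw.arg] = ast.literal_eval(kw.value)
        except (ValueError, TypeError):
            return None  # argument value is not a plain literal
    return node.func.id, kwargs

def ast_match(output: str, expected_name: str, allowed: dict[str, list[Any]]) -> bool:
    """Pass if the right function is called with exactly the expected parameters,
    each taking one of its accepted values."""
    parsed = parse_call(output)
    if parsed is None:
        return False
    name, kwargs = parsed
    if name != expected_name or set(kwargs) != set(allowed):
        return False
    return all(kwargs[p] in values for p, values in allowed.items())

def irrelevance_ok(output: str) -> bool:
    """Relevance detection: when no provided function fits, pass only if no call was emitted."""
    return parse_call(output) is None

allowed = {"city": ["Berkeley", "Berkeley, CA"], "unit": ["celsius"]}
print(ast_match("get_weather(city='Berkeley, CA', unit='celsius')", "get_weather", allowed))  # True
print(ast_match("get_weather(city='Paris', unit='celsius')", "get_weather", allowed))  # False
print(irrelevance_ok("Sorry, none of the available functions can answer that."))  # True
```

Listing several accepted values per parameter lets equivalent answers (e.g. "Berkeley" and "Berkeley, CA") both count as correct without executing anything.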

A better way to do RAG

RAFT: Retriever-Aware FineTuning for domain-specific RAG 🚀 Drawing parallels between LLMs and students taking open-book (RAG) 📔 and closed-book (SFT) 🧠 exams, we present a better recipe for fine-tuning a base LLM for RAG-focused challenges. Discover how RAFT prepares LLMs to excel with a specific document set, much as students prepare for their finals! 🎓

- Read More: Blog

- Read More: MSFT-META Blog

- Paper: https://arxiv.org/abs/2403.10131

- Reproducibility: GitHub Code

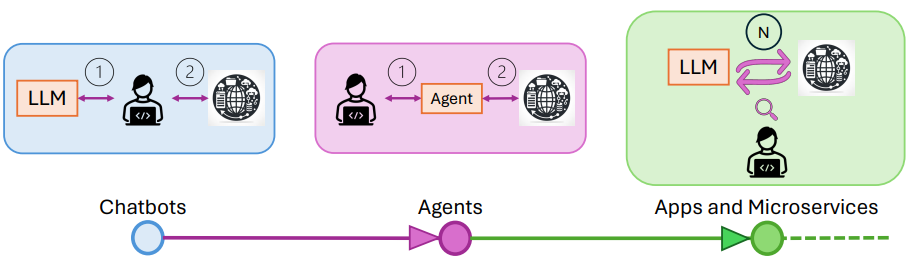
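As we read the RAFT paper, each training example pairs a question with a mix of "oracle" documents (ones that answer it) and "distractor" documents (ones that do not), and for some fraction of examples the oracle is left out entirely. The sketch below builds one such example under those assumptions. The names `RaftExample` and `build_raft_example`, and the defaults `num_distractors=3` and `p_oracle=0.8`, are hypothetical illustrative choices, not values taken from the paper or the reference code.

```python
import random
from dataclasses import dataclass
from typing import Optional

@dataclass
class RaftExample:
    question: str
    context: list[str]  # documents shown to the model, in shuffled order
    answer: str         # target answer, ideally reasoning that quotes the oracle

def build_raft_example(question: str, oracle: str, answer: str, corpus: list[str],
                       num_distractors: int = 3, p_oracle: float = 0.8,
                       rng: Optional[random.Random] = None) -> RaftExample:
    """Assemble one fine-tuning example from an oracle document plus sampled distractors."""
    if rng is None:
        rng = random.Random()
    # Distractors: other documents from the same domain corpus.
    pool = [doc for doc in corpus if doc != oracle]
    context = rng.sample(pool, num_distractors)
    # With probability p_oracle, include the oracle; otherwise the model sees only
    # distractors, which (per our reading of the paper) encourages it to internalize
    # domain knowledge instead of relying purely on retrieval.
    if rng.random() < p_oracle:
        context.append(oracle)
    rng.shuffle(context)  # avoid a positional hint about where the oracle sits
    return RaftExample(question, context, answer)

corpus = ["doc A", "doc B", "doc C", "doc D", "doc E", "oracle doc"]
ex = build_raft_example("What does doc X say?", "oracle doc", "It says ...", corpus,
                        rng=random.Random(0))
print(ex.context)
```

Passing a seeded `random.Random` keeps dataset generation reproducible.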

Runtime for executing LLM-generated actions

Gorilla Execution Engine (GoEX) is a runtime for LLM-generated actions such as code and API calls. It features "post-facto validation" 🔍, which assesses LLM actions after they have been executed. Key to our approach are the "undo" 🔄 and "damage confinement" abstractions, which manage unintended actions and their risks. This paves the way for fully autonomous LLM agents, enhancing interaction between apps and services with humans out of the loop 🚀

- Read More: Blog

- Paper: https://arxiv.org/abs/2404.06921

- Try it out: Web Demo

- Reproducibility: GitHub Code
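The three ideas above (post-facto validation, undo, and damage confinement) can be illustrated with a toy in-memory runtime. This is a hypothetical sketch, not GoEX's API: `Action`, `SketchRuntime`, and the `scope` field are invented for illustration, and real actions (API calls, file edits, database writes) need far more careful undo logic.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Action:
    name: str
    scope: str                # hypothetical: the resource this action may touch
    run: Callable[[], None]   # the LLM-generated effect
    undo: Callable[[], None]  # compensating action that reverses `run`

class SketchRuntime:
    """Toy runtime: confine scope, execute, validate afterwards, undo on failure."""

    def __init__(self, allowed_scopes: set[str]) -> None:
        self.allowed_scopes = allowed_scopes
        self.committed: list[str] = []

    def execute(self, action: Action, validate: Callable[[], bool]) -> bool:
        # Damage confinement: refuse anything outside the permitted scopes.
        if action.scope not in self.allowed_scopes:
            raise PermissionError(f"{action.name} touches disallowed scope {action.scope!r}")
        action.run()
        # Post-facto validation: inspect the state after the action has run.
        if validate():
            self.committed.append(action.name)
            return True
        action.undo()  # roll back the unintended effect
        return False

store: dict[str, str] = {"greeting": "hello"}

def make_set(key: str, value: str) -> Action:
    """Build a key-value write whose undo restores the value seen at build time."""
    old = store.get(key)
    def run() -> None:
        store[key] = value
    def undo() -> None:
        if old is None:
            store.pop(key, None)
        else:
            store[key] = old
    return Action(f"set {key}", "kv-store", run, undo)

rt = SketchRuntime(allowed_scopes={"kv-store"})
print(rt.execute(make_set("greeting", "hi"), validate=lambda: store["greeting"] == "hi"))  # True
print(rt.execute(make_set("greeting", ""), validate=lambda: store["greeting"] != ""))  # False
print(store)  # {'greeting': 'hi'}
```

Pairing every action with its own undo at creation time is what makes validating after execution safe: a failed check can always be reverted.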